Trying out a new format this week. Instead of links, here are some shorts: too little for a post, too much for a link. This week we have:

Power variation in AI data centres

(Link requires registration and full article isn’t free, but there’s a massive preview which is all I have access to)

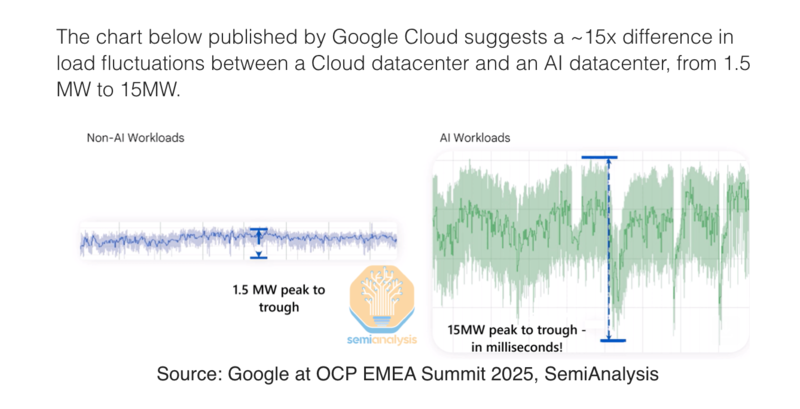

A new-to-me issue with AI workloads: training runs are very, very spiky in their power use.

Google have released this graph

and Meta have this in the Llama 3 paper:

> During training, tens of thousands of GPUs may increase or decrease power consumption at the same time, for example, due to all GPUs waiting for checkpointing or collective communications to finish, or the startup or shutdown of the entire training job. When this happens, it can result in instant fluctuations of power consumption across the datacenter on the order of tens of megawatts, stretching the limits of the power grid. This is an ongoing challenge for us as we scale training for future, even larger Llama models.

So companies are looking at massive battery banks to smooth the load on the grid.

Adopting new CLI tools

I’ve been using a Linux/POSIX CLI for a very long time, but every so often it’s good to change things up. In the last six months I’ve:

- Changed from iTerm2 to Ghostty. I was skeptical that I’d notice any difference, but it does actually feel noticeably faster

- Changed from powerline prompts to Starship. No new functionality, but it’s quicker

- Adopted fzf and integrated it with zsh, so now file completion and history have fuzzy matching

The combined effect is to make things feel fresh, new and faster.
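For anyone wanting to try the same combination, here is a minimal sketch of the relevant `~/.zshrc` lines. It is not necessarily my exact setup: it assumes both tools are already installed and on your `PATH`, and that your fzf is recent enough (0.48 or later) to support the `--zsh` flag.

```shell
# ~/.zshrc (sketch; assumes starship and fzf >= 0.48 are installed)

# fzf: enables Ctrl-R fuzzy history search, Ctrl-T fuzzy file picker,
# and fuzzy completion triggered with **<TAB>
if command -v fzf >/dev/null 2>&1; then
  source <(fzf --zsh)
fi

# Starship: initialise the prompt; the Starship docs suggest keeping
# this at the end of the file
if command -v starship >/dev/null 2>&1; then
  eval "$(starship init zsh)"
fi
```

The `command -v` guards just mean the same file keeps working on a machine where one of the tools isn't installed yet.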

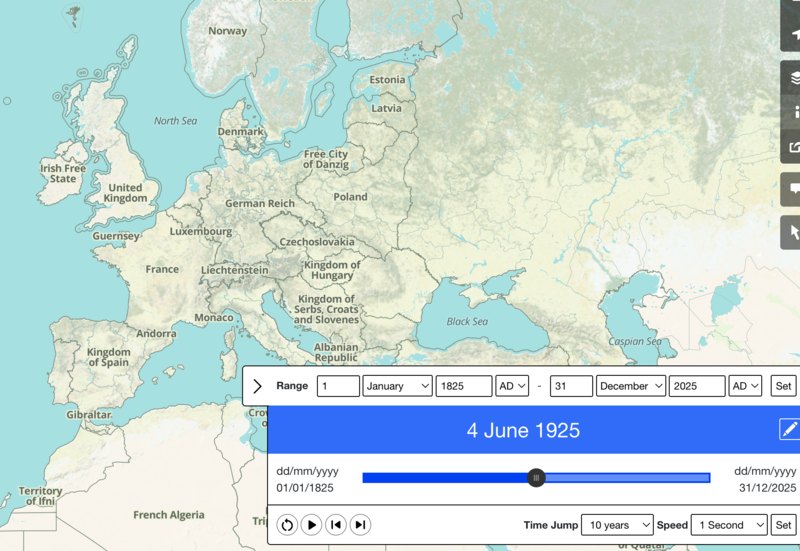

Historical maps

OpenStreetMap-based, but showing borders, railways etc. as they changed over time.