Shorts, June 2026

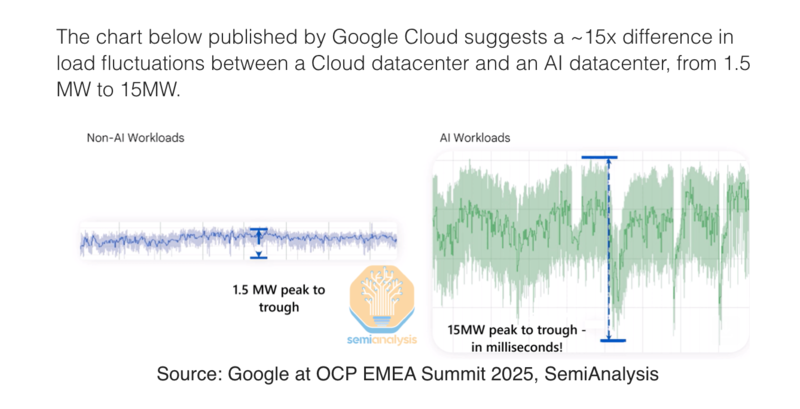

Trying out a new format this week. Instead of links, here are some shorts: too little for a post, too much for a link. This week we have:

- Power variation in AI data centres
- Adopting new CLI tools
- Historical maps

Power variation in AI data centres

(The link requires registration and the full article isn’t free, but there’s a massive preview, which is all I have access to.)

A new-to-me issue with AI workloads: training runs are very, very spiky in their power use. ...